Imagine a child asking her father how a car works. “What makes it go?” she asks him. He tells her that inside the car, there is a smaller car driving on a treadmill, which is connected to the wheels of the bigger car. As the little car drives on the treadmill, the big car drives on the road.

Of course, he is just teasing her with an absurd explanation. Cars don’t have little cars inside them, but even if they did, this “explanation” isn’t really an explanation. To explain what makes the big car go, he would have to explain what makes the little car go. The explanation presupposes what it is supposed to explain: cars that go.

But it has the form of an explanation, and it might trick the little girl into believing that her question has been answered.

The car story is an example of the homunculus fallacy. A more typical example is the use of a “little man in the head” to explain aspects of consciousness. For example, someone might “explain” vision by saying that “the mind’s eye is looking at a screen in the brain”. This is not an explanation of vision, because it presupposes vision. Even if this described some brain process metaphorically, it would not explain vision. We would now have to explain how the “mind’s eye” can “see”.

An explanation of vision should be in terms of lower-level mental processes that are mechanistic and do not presuppose vision. An explanation of how a car moves should involve objects and processes (pistons, gears, combustion, etc.) that are not cars.

Explanations typically describe hidden mechanisms and invoke general principles that define how those mechanisms work. For example, we can explain the movements of the planets by a hidden mechanism (gravity) that operates by general principles (the law of gravitational attraction and Newton’s laws of motion). This explanation not only allows us to predict the movements of the planets, it also relates them to many other things, such as a stone falling on the Earth. It shows how something specific (planetary motion) is an instance of something very general (gravity and motion). Newton’s theory of gravity and motion has explanatory power, because it can be used to generate such explanations for many things. It reduces complexity by describing and predicting many things with a small number of abstract concepts.
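To make the unifying claim concrete, here is a minimal Python sketch that uses one law, F = G·M·m/r², to compute both how fast a stone accelerates at the Earth’s surface and how long the Moon takes to orbit the Earth. It assumes standard approximate SI values for G, the Earth’s mass and radius, and the mean Earth-Moon distance, and it treats the Moon’s orbit as a circle around a fixed Earth. The function names are illustrative only.

```python
import math

# Approximate SI values, assumed for illustration.
G = 6.674e-11           # gravitational constant, m^3 kg^-1 s^-2
M_EARTH = 5.972e24      # mass of the Earth, kg
R_EARTH = 6.371e6       # mean radius of the Earth, m
R_MOON_ORBIT = 3.844e8  # mean Earth-Moon distance, m


def gravitational_acceleration(mass: float, distance: float) -> float:
    """Acceleration toward a body of the given mass: F = G*M*m/r^2 and a = F/m."""
    return G * mass / distance**2


def circular_orbit_period(mass: float, radius: float) -> float:
    """Period of a circular orbit in which gravity supplies the centripetal acceleration."""
    # G*M/r^2 = v^2/r  =>  v = sqrt(G*M/r);  one revolution takes T = 2*pi*r / v
    v = math.sqrt(G * mass / radius)
    return 2 * math.pi * radius / v


g = gravitational_acceleration(M_EARTH, R_EARTH)                  # falling stone
moon_days = circular_orbit_period(M_EARTH, R_MOON_ORBIT) / 86400  # seconds -> days

print(f"falling stone: {g:.2f} m/s^2")
print(f"Moon's orbit: {moon_days:.1f} days")
```

It prints roughly 9.82 m/s² and 27.5 days. The observed sidereal month is about 27.3 days. Most of the gap comes from ignoring the Moon’s own mass, but the point stands: the same small set of principles covers both the stone and the Moon.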

Of course, one can always ask “Why does the world work that way?”. We could seek an explanation for Newton’s theory. We could try to reduce it, and other theories, to an even more general theory. But we would always be left with some theory, and we could always ask for a deeper explanation.

The homunculus fallacy is a pseudo-explanation: an explanation that does not explain, because it does not reduce what it tries to explain to a more abstract level of description. Instead, it posits a hidden mechanism at the same level of description. This hidden mechanism is typically just another instance of what it supposedly explains (the little car inside the bigger car, for example). Normally this is done through a metaphor, and the person committing the fallacy is not aware of their error. They may find it hard to recognize the error, because the metaphor has become entrenched in their way of viewing things.

It is very common to think of human motivation and action as pursuing pleasure and avoiding pain. In this view, we want pleasure and we don’t want pain, so we act to pursue pleasure and avoid pain. This view is very intuitive. However, it is based on a homunculus metaphor, and it contains a homunculus fallacy.

Pursuit and avoidance are behaviors. They presuppose motivation and action conceptually. Behavior is what a theory of human motivation and action is supposed to explain. So, we cannot explain human motivation and action with the concepts of pursuit and avoidance. The use of “pursuit” and “avoidance” to describe mental processes is a metaphor. This metaphor is misleading, because it presupposes motivation and action.

We can think of the motivation system as a little man in the head who chases pleasure on a treadmill, and runs away from pain, but this gets us nowhere. Even if this accurately described something about the brain, we would still have to explain why the little man wants pleasure, and why he doesn’t want pain. We would have to explain his motivations.

To explain human motivation and action, we must describe a mechanism that does not have the properties (motivation and action) that we want to explain, and that mechanism should operate by more general principles.

Motivation cannot be explained in terms of motivation. Consciousness cannot be explained in terms of consciousness.

The homunculus fallacy “explains” a system by positing a part of the system that has the properties of the whole system. It conceptually presupposes what it claims to explain.

There is an inverse of the homunculus fallacy. The inverse homunculus fallacy “explains” a system by positing that it is a part of a bigger system that has the properties of the smaller system. It also presupposes what it claims to explain, but by projecting a part onto the whole, rather than the whole onto a part.

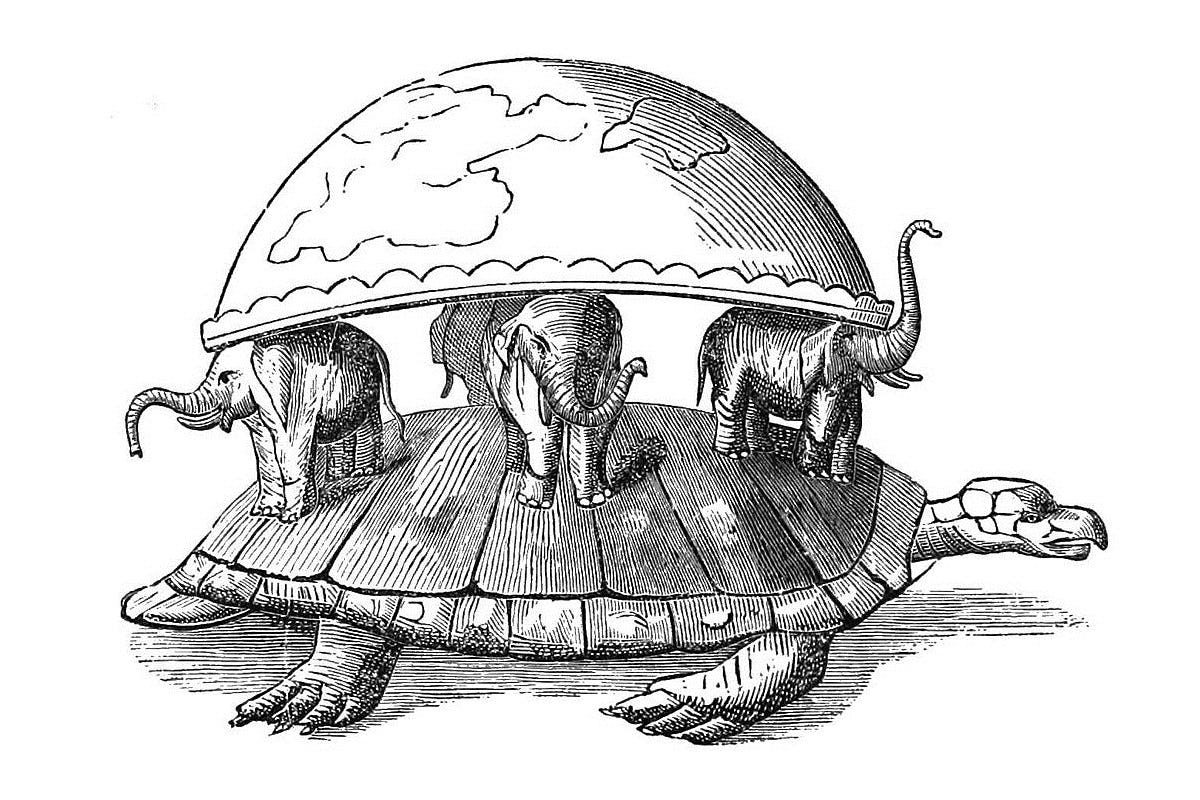

The classic, absurd example of the inverse homunculus fallacy is the “world-turtle”: the theory that the world sits on the back of a giant turtle.

See World Turtle on Wikipedia.

We can imagine how this sort of pseudo-explanation arises. A little girl asks her father “what holds the world up?”.

This is a perfectly reasonable question for a little child or an Indian peasant in 500 AD. In ordinary life, objects fall unless they are held up by something, or actively kept up by some process, such as wings flapping. So, our ordinary intuition is that things fall. Today, most people know that the Earth is a ball in space, and that up and down are not absolute directions, but are relative to the center of the Earth. Without this modern knowledge, it is quite natural to think of the Earth as unmoving, and up and down as absolute directions. It is also quite natural to wonder what lies below.

The father of the little girl tells her “The world is sitting on a turtle’s back”.

Of course, this explains nothing. A turtle is an object that exists on the Earth. Turtles are normally held up by the ground or by water when they are swimming. They have something underneath them. They need air, water and food. The idea of a turtle presupposes the Earth as a whole. Our ordinary assumptions about how the world works are hidden inside the concept of a turtle. But those ordinary assumptions are what we are trying to explain.

The little girl might ask “What holds the turtle up?”. The philosophical joke-answer is that it is turtles all the way down. The father could say that the turtle is standing on another turtle. Or he might say that the turtle is standing on the surface of a bigger world. Either way, the “explanation” does not explain.

A turtle-believer might insist that the turtle theory does explain things. For example, it explains earthquakes: those are caused by the turtle moving. But then why aren’t earthquakes always happening? Why do they happen in specific places? What are volcanoes caused by? Why aren’t there periodic floods when the turtle goes for a swim? And so on. Just because a theory is consistent with some (cherry-picked) data, that doesn’t mean the theory has explanatory power. It must reduce the complexity of the data to have explanatory power.

God is another example of the inverse homunculus fallacy. Supposedly, the concept of God explains various things, such as the existence of the Earth, the existence of life, human nature, rationality, etc. But the concept of God presupposes those things. God is a metaphorical person. He has the properties of a human being. He exists. He is alive. He has desires. He thinks and acts.

The Christian notion of God is analogous to the world-turtle. It tries to explain the whole (the universe) in terms of something that exists within the universe: a person. Of course, the imaginary person of God is BIG in various ways, just as the turtle is big. God doesn’t just think and act. He is omniscient and omnipotent!!

One could use the same trick with the world-turtle, by claiming that the turtle is infinitely big. Then there would be nothing underneath the turtle. It would be “turtle all the way down” instead of “turtles all the way down”. Of course, it still wouldn’t explain anything.

Like turtles, human beings exist on the Earth, and our properties are tied to our way of existence. Mental operations, such as thought, desire and action, are ways of solving the problems of living beings. They are adaptations. It makes no sense to extend them to infinity, or attribute them to a universal being.

If God is omnipotent, then there is no distinction between his will and reality. But will is the desire to change reality. Omnipotence is an absurd notion, or it is just a confusing way to say “causality”. Likewise for omniscience. If God is omniscient, then there is no distinction between his knowledge and reality. But knowledge is limited information about reality, not reality itself. Just as “up” and “down” are only meaningful on the Earth (or some other large body), “knowledge” and “will” are only meaningful for limited beings, such as us.

See To EyesWideOpen for more about the conceptual incoherence of the God-concept.

If we eliminate the human properties of God, exchanging “will” for “causality” and “knowledge” for “existence”, then we have exchanged “God” for “reality”. Any apparent explanatory power of the God-concept comes from conceptual question-begging, through the metaphor of God as a human being.

The cosmological arguments for God involve the inverse homunculus fallacy. They insist that we must posit God to explain reality, but their notion of God presupposes the properties that are “explained”. Just as the world-turtle smuggles in ordinary assumptions about objects and up and down, God smuggles in ordinary assumptions about reality.

“All is mind” is a more modern, sophisticated version of the world-turtle. Supposedly, the human mind is explained by claiming that everything is mind. This uses the human mind as a metaphor for the whole of reality. Of course, the metaphor smuggles in all the properties of the human mind. The great virtue of this “metaphysical theory” is that it “explains” the human mind. But of course it does no such thing. It simply shifts the problem of explanation to reality as a whole, just as the world-turtle shifts the problem of what holds the world up to what holds the turtle up.

“The universe is a computer (program)” is another example of the inverse homunculus fallacy. It applies the metaphor of a computer to the universe as a whole. But of course a computer is something that exists within the universe. It is a mechanism that operates according to physical laws. So, nothing is explained by positing that the universe is a computer or a computer program.

Subtler versions of these fallacies might be involved in certain scientific theories and problems. For example, the “selfish gene” is a homunculus metaphor, and (as I argued in Debunking the Selfish Gene) a misleading one.

We can’t avoid using metaphors, and metaphors will normally be at the ordinary level of description. We’re often projecting ordinary things “upward” or “downward” to explain things at larger or smaller scales. People can create circular explanations with metaphors, and such errors can be difficult to spot.

Many people (and not just little girls) have been tricked by these fallacies. To avoid them, you have to think carefully about the presuppositions of the concepts that you use.

> “The universe is a computer (program)” is another example of the inverse homunculus fallacy. It applies the metaphor of a computer to the universe as a whole. But of course a computer is something that exists within the universe. It is a mechanism that operates according to physical laws. So, nothing is explained by positing that the universe is a computer or a computer program.

Of course, "world is a simulation" doesn't explain much. That it is not explanatory doesn't mean it's not true (doesn't mean it is, either). It's somewhat unfair to compare it to God or turtles, since people who bring it up usually are not claiming it does.

Inside our very real computers, we do a ton of virtualization nowadays. Computers inside computers inside computers. Also, we run stuff on them, including virtual realities, because there are reasons to do so.

Of course, Occam's razor cuts the computer out of the equation, so it makes no sense to believe it's a simulation without evidence. It's not pointless to think about the possibility, though, for various reasons. One of them is that physics doesn't stop us from, at the very least, hooking a brain's I/O up to a computer. With some more technological progress, we could make "my world is a simulation" true for some person.

We can't really simulate our universe inside our universe... probably. But if we were in that person's shoes (the person from the previous paragraph), the outer universe would be a complete mystery. It wouldn't need to be similar to ours in any way.

--------

The selfish gene is just an anthropomorphizing analogy; the mechanics of evolution happen to map well onto the concept. Obviously, genes are just dumb molecules at the end of the day, with no conscious desires.

Anthropomorphizing things sometimes leads to errors (e.g. when thinking about AI, it's easy to anthropomorphize a little, or a lot, too far), but it's not inherently wrong.

I didn't read your book, though, so I'm not saying it's pointless to attack the framework. Maybe something else makes for a better model or framework than the concept of selfishness.

I didn't really finish these arguments, but I need to go to sleep now.

I didn't know this concept had a name. It bothers me sometimes, and I can't always pin it down, because I've never thought about naming or articulating it.

One example that always bothers me is pop-science YouTube videos that purport to explain general relativity by placing heavy objects on a rubber sheet and noting how other objects move on it. The sheet bends because of gravity, and the objects roll downward because of gravity, so these videos end up explaining general relativity in terms of gravity. This is an example of such an explanation: https://www.youtube.com/watch?v=MTY1Kje0yLg

Richard Feynman addresses the question "what's a magnet?" by explaining this concept (without giving it a name): he says it would be foolish to describe magnetic and electric forces in terms of rubber bands, because the behavior of rubber bands is ultimately explained in terms of those same forces. https://www.youtube.com/watch?v=Q1lL-hXO27Q

Now, I disagree with some of the examples you give.

> “All is mind” is a more modern, sophisticated version of the world-turtle. Supposedly, the human mind is explained by claiming that everything is mind.

I'm not sure to what specific theory you are referring, but I might take a guess. There's some theory I've seen floated by some philosophers that everything has some level of consciousness.

Now, I'm sympathetic to this theory. It's not "homunculus" in the same sense that Newton's gravity is not. Gravity presupposes motion. It doesn't explain the existence of motion, it explains the specifics of motion: why do things move this way and not that way.

In the same vein, to have any viable explanation for subjective experience, we must assume that elements of it exist everywhere, all the time, so that the question becomes: how does the brain wield this property (which simply exists in nature) to form specific subjective experiences out of the signals in the nervous system? Without this assumption, there's no reason at all, given all the physics we know of, to predict that any information processing system would ever produce a subjective experience.

> It is very common to think of human motivation and action as pursuing pleasure and avoiding pain. In this view, we want pleasure and we don’t want pain, so we act to pursue pleasure and avoid pain. This view is very intuitive. However, it is based on a homunculus metaphor, and it contains a homunculus fallacy.

Well, it depends on what level of explanation you are talking about.

Someone might be bewildered by their own behavior, not understanding why they do certain things even though they would "rationally" rather avoid them. Here the explanation states that how you behave has less to do with what you rationally decide than with what your subconscious mind views as pleasurable or painful. This explanation works because it resolves a problem and helps the person find other ways to change their behavior. Now, I'm not saying this explanation is correct. I actually think it's incorrect (or, let's say, misleading and incomplete), and I am working on an article to elaborate my take on the problem.

Even when you go to a lower level, you have to take "action" for granted: the brain has a mechanism to decide what actions to take. The question then becomes how it decides which action to take, given the unlimited possibilities of things one can do.

Ultimately you have to go to a lower level where "electrical" signals travel through nerves from the brain to the muscles, but this level is not going to be very helpful to understand what motivates human behavior.

> Motivation cannot be explained in terms of motivation. Consciousness cannot be explained in terms of consciousness.

I think a lot of things actually can be explained in terms of a more basic version of themselves. Computers sort of have "little computers" inside themselves: the CPU has cores, each core has "units" that do some "computations" such as addition or multiplication. The job of the CPU is (roughly) to assign work to each unit then take the output from each unit and place it in the right location, and these tasks themselves are performed by other units within the CPU.

Now, the way these units perform their computations is ultimately explained in terms of electrical signals passing through wires and transistors, and transistors are explained in terms of semiconductors. So you do ultimately get an explanation that does not refer to computers, but you have to dig down several layers of abstraction before you hit a level that appears to have nothing to do with the higher ones.
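The layering can be sketched in Python as a toy model. It assumes a NAND gate as the only primitive (a common textbook choice, since NAND is functionally complete) and is not a description of how any real CPU's adder is built. The helper names (`nand`, `xor`, `full_adder`, `add`) are hypothetical. An 8-bit ripple-carry adder is built from full adders, which are built from gates that do not add anything themselves.

```python
def nand(a: int, b: int) -> int:
    """The only primitive: outputs 0 only when both inputs are 1. It does no arithmetic."""
    return 0 if (a and b) else 1


def xor(a: int, b: int) -> int:
    # Standard four-NAND construction of XOR.
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


def and_(a: int, b: int) -> int:
    # AND is NAND followed by an inverter (a NAND with both inputs tied together).
    n = nand(a, b)
    return nand(n, n)


def or_(a: int, b: int) -> int:
    # OR via De Morgan: NAND of the two inverted inputs.
    return nand(nand(a, a), nand(b, b))


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Adds three bits; returns (sum bit, carry-out bit)."""
    partial = xor(a, b)
    total = xor(partial, carry_in)
    carry_out = or_(and_(a, b), and_(partial, carry_in))
    return total, carry_out


def add(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry addition of two unsigned integers; the result wraps modulo 2**width."""
    result, carry = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result


print(add(23, 19))
```

Here `add(23, 19)` returns 42, yet no single function below `add` "knows" arithmetic: each layer is explained by a lower one that lacks the higher layer's property.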